Beyond the Buzz: A Real-Talk Guide to Fine-Tuning Your Own LLM

Ever feel like off-the-shelf AI doesn't quite get you? Let's pull back the curtain on fine-tuning. It's the secret sauce to making a large language model speak your language, and it's more accessible than you think.

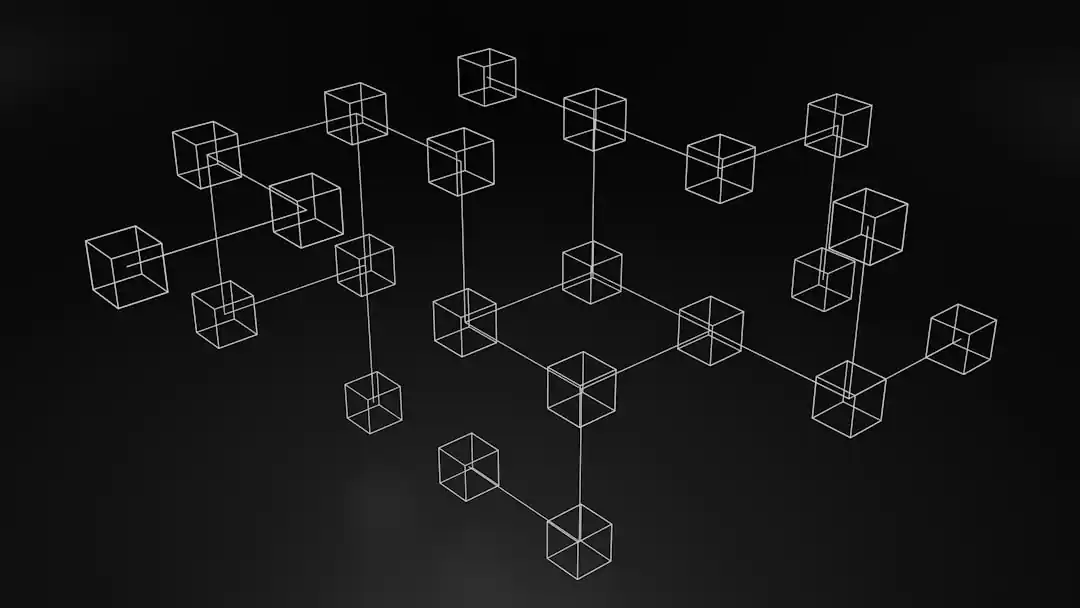

Let’s be honest. The world of large language models (LLMs) can feel a bit like a distant, futuristic city—gleaming, impressive, but maybe not built for your specific neighborhood. We see these incredibly powerful tools like GPT-4 doing amazing things, but when we try to apply them to our own niche projects, something often gets lost in translation. The model might not know our company’s jargon, the specific style of our brand, or the intricate details of our industry. It’s a generalist in a world that often demands a specialist.

I used to think that this was just the reality of working with AI. You take what you can get, and you spend hours crafting the perfect, elaborate prompt to coax the model into giving you something close to what you need. The idea of actually changing the model itself—fine-tuning it—felt like a monumental task, reserved for teams with massive budgets and access to supercomputers. It seemed like trying to add a new room to a skyscraper with just a hammer and some nails.

But the landscape has shifted, and it’s happened faster than almost anyone expected. Fine-tuning is no longer some dark art practiced only in the hallowed halls of major tech companies. Thanks to new techniques and a thriving open-source community, it’s become a practical, accessible, and frankly, game-changing process for developers, creators, and businesses who want to create truly bespoke AI experiences. It’s about turning that brilliant, generalist AI into your own in-house expert.

Why Bother? The Real Value of a Specialized Model

You might be thinking, "Is it really worth the effort?" With techniques like prompt engineering and Retrieval-Augmented Generation (RAG) getting so good, it’s a fair question. For many tasks, a well-designed prompt or a system that pulls from a knowledge base can work wonders. But fine-tuning plays a different, more fundamental role. It’s not just about giving the model new information; it’s about changing its behavior, its style, and its very understanding of a specific domain.

Imagine you’re building a customer support bot for your software company. A generic model might give technically correct but cold, robotic answers. Through fine-tuning, you can teach it your brand’s friendly and empathetic voice, train it on thousands of examples of your actual customer interactions, and make it fluent in your product’s unique features. The result is a bot that doesn't just answer questions but represents your brand authentically. This is where fine-tuning shines: it instills a consistent personality and expertise that prompting alone often struggles to reproduce reliably.

Furthermore, a fine-tuned model can be significantly more efficient. By specializing a smaller model, you can often match or beat a much larger, general-purpose one on that narrow task. Smaller models are cheaper to run, whether you pay per API call or host them yourself, and they return responses faster. It’s a strategic move that pays dividends in both user experience and operational cost, giving you a more reliable and consistent AI that you can count on to perform one specific job well, time and time again.

The Toolkit: How Fine-Tuning Became Accessible

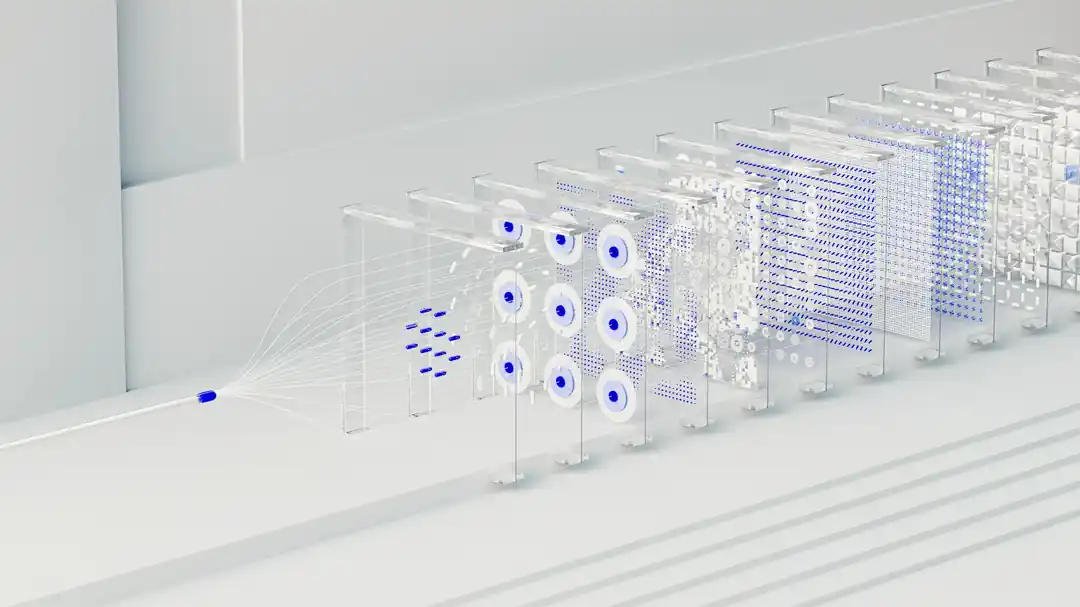

The breakthrough that really opened the doors for everyone is a set of techniques called Parameter-Efficient Fine-Tuning, or PEFT. The name sounds complex, but the idea is beautifully simple. Instead of retraining all the billions of parameters in a massive model (which is what used to be so expensive), PEFT methods find clever ways to update only a tiny fraction of them—often less than 1%. The rest of the model remains frozen, preserving its powerful foundational knowledge.
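To make "a tiny fraction" concrete, here is a back-of-the-envelope calculation in Python. It assumes LoRA (described next) is the PEFT method, and the model shape is hypothetical: roughly 7 billion frozen parameters, 32 layers, and two 4096×4096 projection matrices adapted per layer at rank 8. Swap in your own model's numbers.

```python
def lora_param_count(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one d_out x d_in weight matrix.

    The update is B @ A, where A is rank x d_in and B is d_out x rank.
    """
    return rank * d_in + d_out * rank


def trainable_fraction(total_params: int, n_layers: int, adapted_shapes, rank: int) -> float:
    """Share of all parameters that are actually trained.

    adapted_shapes lists (d_in, d_out) for each matrix adapted in a single layer;
    the same set is assumed to be adapted in every layer.
    """
    per_layer = sum(lora_param_count(d_in, d_out, rank) for d_in, d_out in adapted_shapes)
    added = n_layers * per_layer
    return added / (total_params + added)


if __name__ == "__main__":
    # Hypothetical 7B-class model: two 4096x4096 projections adapted in each of 32 layers.
    shapes = [(4096, 4096), (4096, 4096)]
    frac = trainable_fraction(7_000_000_000, 32, shapes, rank=8)
    print(f"trainable share: {frac:.4%}")
```

With these assumed numbers, about 4.2 million parameters are trained, roughly 0.06% of the model, comfortably under the 1% mark.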

The most popular of these methods is LoRA, or Low-Rank Adaptation. To put it simply, LoRA leaves the original weight matrices frozen and adds a pair of small, low-rank matrices alongside selected layers (commonly the attention projections). During fine-tuning, only these small matrices are trained, and their product is added as a correction to the frozen layer’s output. It’s like giving a seasoned chef a new spice to work with; you’re not teaching them how to cook all over again, you’re just giving them a new tool to subtly change the flavor of the dish. This approach dramatically cuts down on the required computing power and memory, to the point where you can fine-tune models with billions of parameters on a single consumer-grade GPU, particularly when the frozen base model is also loaded in quantized form.
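Here is a minimal, dependency-free sketch of the LoRA idea for a single linear layer: the output is the frozen path `W x` plus a scaled low-rank correction `(alpha / rank) * B (A x)`. The names `LoRALinear`, `rank`, and `alpha` are illustrative, not any library's API, and real implementations operate on tensors with autograd rather than Python lists.

```python
import random
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LoRALinear:
    """A frozen linear layer W plus a trainable low-rank update B @ A."""

    def __init__(self, weight: Matrix, rank: int, alpha: float, seed: int = 0):
        self.weight = weight  # frozen: d_out x d_in, never updated during training
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        # A (rank x d_in) starts small and random; B (d_out x rank) starts at zero,
        # so before training the adapted layer behaves exactly like the original.
        self.A: Matrix = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B: Matrix = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x: Vector) -> Vector:
        base = matvec(self.weight, x)               # original, frozen path
        delta = matvec(self.B, matvec(self.A, x))   # low-rank correction
        return [b + self.scale * d for b, d in zip(base, delta)]
```

During training, only `A` and `B` would receive gradient updates. Because the correction is just `scale * B @ A`, it can also be merged into `W` after training, so inference costs the same as the original layer.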

This efficiency is a complete game-changer. It means faster iteration, lower costs, and the ability to experiment without needing a research grant. When you’re done, you don’t have a new multi-gigabyte model to store; you just have your small LoRA "adapter" file, which might only be a few megabytes. You can have one base model and dozens of these little adapters, each one a specialist for a different task, ready to be swapped in and out as needed. It’s a modular, efficient, and incredibly powerful way to manage specialized AI.
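The "one base model, many adapters" pattern can be sketched as simple bookkeeping. Everything here is hypothetical: the `AdapterRegistry` class, the model id, the adapter paths, and the parameter counts are placeholders, and storage assumes 2 bytes per parameter (fp16/bf16). Real libraries load adapter weights into the model; this sketch only tracks which one is active and how much disk the adapters take.

```python
from typing import Dict, Optional, Tuple


def adapter_size_bytes(n_params: int, bytes_per_param: int = 2) -> int:
    """Storage for one adapter; 2 bytes per parameter assumes fp16/bf16 weights."""
    return n_params * bytes_per_param


class AdapterRegistry:
    """Hypothetical bookkeeping for one shared base model and many task adapters."""

    def __init__(self, base_model_id: str):
        self.base_model_id = base_model_id
        self._adapters: Dict[str, Tuple[str, int]] = {}  # task -> (path, n_params)
        self.active: Optional[str] = None

    def register(self, task: str, adapter_path: str, n_params: int) -> None:
        self._adapters[task] = (adapter_path, n_params)

    def activate(self, task: str) -> str:
        """Switch tasks: only the small adapter changes; the base stays loaded."""
        if task not in self._adapters:
            raise KeyError(f"no adapter registered for task {task!r}")
        self.active = task
        return self._adapters[task][0]

    def total_adapter_mib(self) -> float:
        total = sum(adapter_size_bytes(n) for _, n in self._adapters.values())
        return total / (1024 ** 2)


if __name__ == "__main__":
    registry = AdapterRegistry("example-org/base-7b")  # hypothetical model id
    registry.register("support", "adapters/support", n_params=4_194_304)
    registry.register("summaries", "adapters/summaries", n_params=4_194_304)
    print(registry.activate("support"))
    print(f"{registry.total_adapter_mib():.1f} MiB for all adapters")
```

With these assumed sizes, each adapter is 8 MiB, while a 7-billion-parameter base stored at 2 bytes per parameter takes about 14 GB, which is why keeping one base and many adapters is so economical.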

Your Most Important Job: Curating the Perfect Dataset

If there’s one thing to take away about fine-tuning, it’s this: the model you create will only ever be as good as the data you train it on. This is where the human element is most critical. You can’t just dump a pile of random documents on the model and hope for the best. You need to be a curator, a teacher, and a data janitor. A small, pristine dataset of a few hundred high-quality examples will often outperform a massive, messy, and unfocused one.

What does a "high-quality" example look like? It should be a clear, concise demonstration of the exact task you want the model to perform. For a chatbot, this might be a series of "prompt" and "response" pairs. For a summarization tool, it would be articles paired with their ideal summaries. The key is consistency and clarity. Your goal is to create a perfect little textbook from which the model can learn its new skill.
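Prompt/response pairs are commonly stored as JSON Lines, one example per line. The sketch below assumes a `"prompt"`/`"response"` schema; training tools differ (some expect a chat-style list of messages instead), so treat the key names as an assumption to adjust to whatever your trainer expects. The example pairs are made up.

```python
import json
from typing import Iterable, Tuple


def to_jsonl(pairs: Iterable[Tuple[str, str]]) -> str:
    """Serialize (prompt, response) pairs as JSON Lines, one example per line."""
    lines = []
    for i, (prompt, response) in enumerate(pairs):
        if not prompt.strip() or not response.strip():
            raise ValueError(f"example {i} needs a non-empty prompt and response")
        # "prompt"/"response" is an assumed schema; match your training tool's format.
        record = {"prompt": prompt, "response": response}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "".join(line + "\n" for line in lines)


if __name__ == "__main__":
    examples = [
        ("How do I reset my password?",
         "No problem! Go to Settings > Account > Reset password and we'll email you a link."),
        ("Can I export my data?",
         "Absolutely. Open Settings > Data and choose Export to download a copy."),
    ]
    with open("train.jsonl", "w", encoding="utf-8") as f:
        f.write(to_jsonl(examples))
```

Rejecting empty fields at write time is a cheap guard; the deeper work of checking tone and correctness still has to happen by reading the examples.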

This process is often the most time-consuming part of fine-tuning, but it’s also the most valuable. It involves gathering relevant data, cleaning it meticulously to remove errors and inconsistencies, and formatting it perfectly. It’s not glamorous work, but it’s the foundation of everything. Rushing this step is the surest way to get a disappointing result. Investing time here will pay off tenfold in the final performance of your model.
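A few of the cleaning steps described above can be automated. This sketch normalizes Unicode, trims whitespace, drops empty or overlong examples, and removes near-exact duplicates (compared case-insensitively with whitespace collapsed). The 4,000-character limit and the duplicate rule are arbitrary assumptions; case-insensitive matching could merge examples where case matters, so tune both to your data.

```python
import unicodedata
from typing import Dict, List, Tuple

Example = Dict[str, str]


def _dedup_key(text: str) -> str:
    # Lowercase and collapse runs of whitespace, for comparison only.
    return " ".join(text.lower().split())


def clean_examples(examples: List[Example], max_chars: int = 4000) -> Tuple[List[Example], Dict[str, int]]:
    """Return the kept examples plus counts of what was dropped and why."""
    seen = set()
    kept: List[Example] = []
    stats = {"empty": 0, "too_long": 0, "duplicate": 0}
    for ex in examples:
        prompt = unicodedata.normalize("NFC", ex.get("prompt", "")).strip()
        response = unicodedata.normalize("NFC", ex.get("response", "")).strip()
        if not prompt or not response:
            stats["empty"] += 1
            continue
        if len(prompt) + len(response) > max_chars:
            stats["too_long"] += 1
            continue
        key = (_dedup_key(prompt), _dedup_key(response))
        if key in seen:
            stats["duplicate"] += 1
            continue
        seen.add(key)
        # Keep the trimmed original text so intentional formatting survives.
        kept.append({"prompt": prompt, "response": response})
    return kept, stats
```

The returned counts are worth reading: a sudden jump in duplicates or empty fields usually points to a problem upstream in how the data was collected.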

The Journey Begins Here

Embarking on the path of fine-tuning is an incredibly rewarding experience. It’s a journey that takes you from being a passive user of AI to an active creator, shaping these powerful tools to serve your unique vision. It’s a blend of art and science—the art of curating the perfect dataset and the science of applying the right techniques to train your model effectively.

The best way to start is to start small. Pick a very specific, narrow task you want to automate or improve. Find a good open-source base model from a platform like Hugging Face. Then, pour your energy into creating that small, high-quality dataset. The tools and tutorials available today are more accessible than ever, and the community is full of people sharing their knowledge and experiences.

Don't be afraid to experiment, to fail, and to iterate. Your first attempt might not be perfect, but with each cycle of training and testing, you’ll gain a deeper intuition for how these models learn. You are teaching a new kind of mind, and in the process, you’ll learn more than you ever expected. The power to build a truly specialized AI is in your hands, and the journey is just beginning.
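Each training-and-testing cycle needs examples the model never saw during training. Below is a minimal sketch of a reproducible hold-out split and a crude exact-match score. The `generate` argument is a hypothetical stand-in for however you call your fine-tuned model; exact match is far too strict for most free-form text, so in practice you would likely pair it with human review or task-specific metrics.

```python
import random
from typing import Callable, Dict, List, Tuple

Example = Dict[str, str]


def train_test_split(examples: List[Example], test_fraction: float = 0.1,
                     seed: int = 42) -> Tuple[List[Example], List[Example]]:
    """Shuffle deterministically, then hold out a fraction for testing."""
    if not examples:
        raise ValueError("need at least one example")
    items = list(examples)
    random.Random(seed).shuffle(items)  # fixed seed: same split every run
    n_test = max(1, int(len(items) * test_fraction))
    return items[n_test:], items[:n_test]


def exact_match_rate(generate: Callable[[str], str], test_set: List[Example]) -> float:
    """Fraction of held-out prompts whose output exactly matches the reference."""
    if not test_set:
        raise ValueError("test set is empty")
    hits = sum(1 for ex in test_set
               if generate(ex["prompt"]).strip() == ex["response"].strip())
    return hits / len(test_set)
```

Fixing the seed means every iteration is scored against the same held-out examples, so changes in the score reflect changes to your data or training rather than a different split.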
